- Version

-

v1.0.1+10938074204, 2024-09-19

- Editor

-

Florian Gudat

- Module

-

Mastermodul (C533.2 Compulsory module)

https://modulux.htwk-leipzig.de/modulux/modul/6291 - Module Supervisor

-

Prof. Dr.-Ing. Jean-Alexander Müller

- Lecturer

-

Herr Prof. Dr. rer. nat. Andreas Both

Herr M. Sc. Michael Schmeißer - Institute

-

Leipzig University of Applied Sciences

- Faculty

-

Computer Science and Media

Acronyms

Namespaces

This enumeration lists the prefixes and the associated namespace. It will be used as the standard syntax for RDF prefixes. When a prefix is followed by a colon symbol, the part after the colon can be appended to the namespace URI, thereby creating a new URI.

| acl | |

| al |

dynamic (see Custom Vocabulary) |

| claim |

urn:claim# (see Custom Vocabulary) |

| ex |

example |

| foaf | |

| http | |

| interop | |

| ldp | |

| pim | |

| rdfs | |

| solid | |

| st |

The prefixes and namespaces enumerated above are applicable to diagrams, listings, and inline listings throughout the entirety of the document.

Notation

The diagrams in this document were generated using Asciidoctor Diagram 2.3.1[1] and its bundled PlantUML[2] version.

Unless otherwise specified, all framed diagrams will use the UML 2.5.1[3] standard, constrained by the limitations of PlantUML. As defined in the standard, the following abbreviations will be utilized to identify the type of UML diagram:

| cmp |

component diagram |

| sd |

interaction diagram |

| stm |

state machine diagram |

In addition to the UML abbreviation, the following abbreviations are used to identify non-UML diagrams:

| dm |

data model diagram; The information structure is entirely based on RDF, and will be presented as entity-relationship diagrams in Clive Finkelstein’s IE[4] notation, with some additional elements. The text in the double angle brackets will define the entity type (e.g., Dataset, Thing, …). The path labels indicate potential routes through the graph structure, while the number within the bracket indicates the branch that has been taken. |

| wbs |

work breakdown structure; This diagram is a decompositional diagram[5], intended for use in hierarchical structures, originally designed as a project management tool. In this context, it is used to illustrate any kind of hierarchical structure. |

All diagrams and figures presented in this work were created by the author. Any discrepancies have been highlighted in the corresponding figures.

The Solid Project is an RDF-based ecosystem that aims to achieve a decentralized web for individuals, social entities, or software. The technology is still in development and not yet fully evolved. This work proposes an experimental vendor-agnostic approach of extending Solid via a server-side application layer proxy, with the objective of increasing the traceability and access control of requested resources. It also considers the impact of this approach on the system design and performance efficiency.

This document is divided into five sections: the Introduction, Theoretical Framework, Design and Implementation, Analysis, and Reflection. The Introduction will provide a more detailed examination of the research context and the terminology utilized in this document. Fundamental concepts, such as data sovereignty and recommended quality aspects, will be summarized in the Theoretical Framework. The Design and Implementation section will explain the system design concept and the technological choices made. The Analysis section will define the experimental conditions and validate them with the quality criteria described in the Theoretical Framework. The Reflection section will discuss the considerations from the Introduction, describe future work, and conclude the overall research.

Part I: Introduction

The introduction will set out the scope of the research and define the terminology used in this context. The research chapter will begin with a motivating problem and objectives that define the interest in this research. This chapter will also define the design of this research and the concept, including the requirements. The terminology chapter will explain general Solid terms, the understanding of access monitoring, the system definitions, and the proxy design pattern.

1. Research

The topic of decentralized data processing represents a significant and complex area of inquiry within the field of computer science. One of the most significant developments in this field was the advent of the Internet. The server-client model, which separates processing into two parties, has enabled a wide range of consumers to benefit from this concept. In this regard, the use of web applications continued to grow. One of the limitations of this approach is that the data processing and storage are often shifted to the server, which increases the problem of vendor lock-in for one’s own data. In order to overcome this issue, Solid Project was introduced as a vendor-agnostic approach for identity management, data storage, and access granting. It is an RDF-based ecosystem that aims to achieve a decentralized web for individuals, social entities, or software.

The processing of data stored in the private space must be decentralized due to the decentralized nature of Solid. Given the involvement of multiple parties, resources maintained by the Solid Provider are frequently utilized. While access granting for these resources is a significant topic in the Solid Community, the actual access is not part of the scope of Solid, resulting in a lack of transparency and access control.

This thesis aims to develop and analyze a Solid-based application that aligns with the Solid ecosystem without enforcing it. The application will enhance data privacy concerns, specifically in terms of data traceability and access control. The goal is to increase visibility and track requested resources from parties within and beyond the ecosystem through access logs and their representation. These requests should be processed and logged to achieve better control over the exposed data, by monitoring them. All of these achievements should be established in a clear context and communicated through data APIs to ensure safety for new features and potential changes in Solid Providers.

1.1. Problem Definition

The backbone of the Solid project is the Solid Protocol. The protocol specifies how agents store their data securely in decentralized data storages, also known as Pods. These storage systems can be compared to secure, personal web servers for data, such as Dropbox, ownCloud, Nextcloud, or similar software. However, they differ in that they do not have a standardized and unified public API. This interface utilizes WebIDs to identify the agents within the system. As the storage owner has control over which agents can access it, they can choose to restrict access to only WebIDs, completely restrict access, or make it publicly accessible.

The Solid Community Group has been developing this technological approach since 2018. The ecosystem is currently in its early stages of development, and some specifications are still in draft form. Consequently, it may lack some features that are recommended in productive environments. This leads to the following issues:

| ISSUE-1 |

The Solid Protocol only specifies the ability to grant or deny access. It does not track the actual request for a resource. |

| ISSUE-2 |

Solid is based on RDF and therefore favors interconnected data, what increased the demand for monitoring the stored data. |

| ISSUE-3 |

There may be changes to existing specifications as they need to be improved or are still in the draft stage. |

| ISSUE-4 |

The Solid ecosystem will be expanded with newly introduced specifications or APIs. |

| ISSUE-5 |

New Solid Providers are being introduced because of their increasing popularity. |

The first and second issues describe the need for traceability to control access to one’s own data. A general problem with updates is addressed by the third through fifth issues. However, all of these concerns will affect the objectives of this research.

1.2. Objectives and Research Interest

The research has two primary objectives that align with the listed problems. The first goal is to achieve transparency regarding which data has been accessed and by whom. This would significantly increase control over one’s own data. The second objective is to find a solution that can handle the fast-moving Solid ecosystem.

The objectives described leads to the following key research question this paper aims to address:

Is there a Solid-based system design that enables increased transparency and access control for requested personal data? Can the system use the network interface in a vendor-agnostic way without a significant decrease in performance?

As answering this question in one go is difficult, it will be divided into multiple sub-questions:

| QUEST-1 |

Can a Solid-based system meet both functional and non-functional requirements without compromising system design? |

| QUEST-2 |

To what extent does the process contribute to the increase in network requests and load? |

| QUEST-3 |

Which system and test parameters influence the executed test cases? How does the influence of the parameters manifest itself? |

The listed questions cannot definitively determine whether the proposed approach is suitable for use in productive environments. However, they can reveal the vulnerable aspects of this concept to determine whether this approach should be pursued in principle.

1.3. Research Design

The research will employ two different research methodologies, namely qualitative and quantitative, to address the research objectives and research interests. Regarding the qualitative analysis, it is intended to explain how the proposed approach will affect the system design as required by QUEST-1. A quantitative analysis will quantify the expected increase in the network load required in QUEST-2 and QUEST-3.

The identified analyses will be conducted using data and insights collected from an experimental prototype.

The prototype must satisfy the specified requirements, which are derived from the problem definition.

The data set is shown in Table 1.

It contains the timeStamp of the sample and the elapsed time until the test concluded.

As a sequence of network operations is tested, a label for the individual action must be set.

In addition, the responseCode of the HTTP request will indicate whether the operation was successful or erroneous.

The URL is necessary in case further insights into the individual HTTP request are required.

In order to have comparable values, each sample will be taken with and without the considered approach.

Item |

Format |

Example |

|---|---|---|

timeStamp |

number |

1716570602084 |

elapsed |

number |

230 |

label |

string |

Created 0 |

responseCode |

number |

201 |

URL |

string |

http://proxy.localhost:4000/client100/run1716570595598_thread1_loop1_resource0 |

It should be noted that the general proxy functions of delegating and forwarding requests have been excluded from this view. There are various implementations of proxies that must not be considered in this research. However, the DPC proxy module, which runs before and after the client response and request, has been included.

1.4. Concept and Requirements

This section will address the general conceptual idea and subsequent refinements to the idea. Based on the aforementioned idea, the requirements for the prototype will be established in order to achieve the desired outcomes.

1.4.1. Concept

The concept is inspired by existing software solutions in the field of e-governance, such as X-Road from Estonia and XDatenschutzcockpit, which is currently being developed in Germany for access control of citizen data. Both systems have an embedded logging system that monitors access data. Since they are tied to the specific context in which they are used, the idea of a Data Privacy Cockpit (DPC) will be ported to the Solid ecosystem.

When applying the concept to the Solid context, two fundamental software components can be identified to solve the problems under the given premises:

| DPC Middleware |

A server-side proxy module at the application level monitors data traffic and creates logs if necessary. |

| DPC Client |

The traffic data from the reverse proxy is displayed and managed by the client. |

The concept idea’s data capturing unit, the DPC Middleware, will be implemented as a proxy to meet the requirements of vendor-agnostic software that is secure from updates, as criticized in ISSUE-3, ISSUE-4, and ISSUE-5. However, this requires the application to be a Solid application, as it communicates over HTTP APIs with the actual Solid Provider. Since every request passes through the reverse proxy, there is a high risk of resulting in an inefficient software solution.

Upon reflection on the prototype, two central issues of the concept emerge instantly. The first is the manner in which the data is captured by the DPC Middleware. The second is the means by which the DPC Client is aware of the owner or potential accessor of the data captured.

Data Capturing Strategies

In traditional approaches to data capturing, such as the logging or monitoring of access to resources on a web server, data is typically raised in a task that is executed in the background. To illustrate, in the context of Node.js[6], this may be accomplished through the use of libraries such as morgan[7] or winston[8], which can be mounted as middlewares in a web server. In the context of the proposed application, it is necessary to distinguish between requests based on their storage resource, for instance, through the use of a registry. This presents an opportunity for an agent to opt in for monitoring. This leads to two methods that are applicable in the context of how access logs can be captured.

Both options represent viable strategies for consideration. However, the permanent strategy is more restrictive and may not be optimal in this context. In contrast, the opt-in strategy requires an agent to actively initiate access logging, which aligns with the concept of consent, a significant strength of the Solid Ecosystem.

Ownership Verification

In decentralized systems, it appears that a resource cannot be directly associated with an owner. This is also the case in Solid-based systems. Despite the necessity for the system to know the ownership of the individual resource, it may not be exposed to the public. One solution to this problem is to write a randomized verification token in the space associated with the owner. This approach will be used within the prototype developed. This ensures that the requester has a minimum level of privileges to the resource space. It works in a manner analogous to other services, such as Google’s Search Console[9]. A similar challenge is faced by these services, namely that the DNS server is only aware of the domain name and IP address relationship, but lacks the information necessary to determine the ownership of the domain name.

1.4.2. Requirements

The tension between vendor-agnostic software and efficiency is a key consideration for the solution’s requirements, particularly in non-functional requirements. However, the functional requirements are straightforward.

Functional Requirements

The following list outlines the minimum functions that the prototype must have in order to answer the questions.

Non-Functional Requirements

In comparison to the functional requirements, establishing the following non-functional requirements is more challenging. However, they are even more important as they verify the general conceptual idea.

The proposed solution is based on both functional and non-functional aspects. The objective is to develop a prototype that meets the client’s requirement for traceability of accessed resources while operating accurately within a Solid ecosystem.

The conceptual idea is considered successful if the requirements could be satisfied adequately. The adequacy will be evaluated with the prototype, as described in the Research Design.

2. Terminology

2.1. Access Control and Traceability

Access Control represents one of the primary use cases of the Solid Protocol, which utilizes Authentication and Authorization. It primarily concerns the granting and revocation of access privileges, with the objective of providing a systematic response to the question of who is permitted to perform which actions at which locations and times (Fischer & Hofer, 2011).

Traceability, is defined in two distinct ways. On the one hand, it can be understood as a step-by-step examination of a program during troubleshooting. Alternatively, as in the case examined in this work, it refers to the tracing of a route of data in communication. However, it should be noted that there is no uniform specification that allows for this definition to be universally applied (ibid.).

Extending access control and traceability means enabling an option that allows the tracing of a route of data. This allows users to have greater control of the exposed data and access privileges given. This should, in general, enhance access control, which might become obscured in a growing decentralized and highly connected ecosystem.

2.2. System

Systems are defined in several ways, as Fischer & Hofer (2011) have observed. In general, a system is a combination of interrelated elements that fulfill a common purpose. In computer science, a system is an arrangement of interacting objects that is limited in scope. According to the more concrete definition, a system is the sum of all hardware and software components of a data processing system from the point of view of their interaction.

2.2.1. Network Interfaces

In the context of a data-driven web-based system, the interaction of the system is further concretized. The system must interact via data APIs over the network. This is sometimes referred to as Resource-Oriented Architecture (ROA), as defined by Richardson & Ruby (2007). ROAs are designed to facilitate the manipulation of resources through four operations: creation, reading, updating, and deletion (CRUD). In this architecture, the CRUD operations are mapped to the following HTTP request methods:

| CREATE |

The |

| READ |

The |

| UPDATE |

The |

| DELETE |

The |

2.2.2. Resource CRUD Lifecycle

When applying the CRUD network interfaces to a single resource lifecycle, every achievable state (created, read, updated, and deleted) is reached by an ingoing CRUD transition. The starting state, however, must be the created state in order to have an initial resource to work with. Until its deletion, the same resource cannot be created again. New resources, however, can be created at any time. All other states (read, updated, and deleted) are accessible from the created state as well as the updated and read state, including self-transitions. When the deleted state is the current state of the resource, the only possible transition is the recreation of the resource. This behavior is displayed in Figure 1.

It is recommended that a sequence of CRUD requests, utilising states and transitions as previously described, be executed in a sequential order. Certain sequences may allow parallel execution, however, it can lead to errors due to the occurrence of illegal transitions. An illustrative example of transitions that are executable in parallel is a sequence of only read transitions on an existing resource. These transitions should be executable in parallel without any issues, as a situation where a resource is attempted to be read before its creation cannot occur.

Another scenario to be considered is the utilisation of resources with a deeper hierarchical depth, which are typically designated with a backslash in the URL. In such instances, the resource container can be treated as ordinary resources, with a CRUD network interface and the corresponding lifecycle. However, it is not possible for a resource to exist independently of a parent container resource in the created, updated, or read state.

It is important to note that each state of the state machine can be a final state.

The transitions in this context are translated with different frequencies.

For instance, read transitions appear more often than others.

This segmentation is represented as a probability in this work.

2.3. Proxy

Gamma (2011) defines a proxy as a structural design pattern in software development that serves as a placeholder for another object to access or control. This improves efficiency and reduces complexity. In this case, the accessed object is the Solid Provider.

Proxies can be classified into a variety of groups. In this study, we focus on web proxies, as the communication between the proxy and the Solid Provider will use HTTP APIs. The categorization of proxies is based on the position within the client-server model, as well as the OSI layer in which it is operational.

2.3.1. Server-Side Proxy

The internet operates on the Client-Server model, where a client requests a resource from the server, which responds accordingly. Introducing a proxy to this model involves adding another server. This proxy server accepts requests and delegates them to the actual server. The response is then forwarded to the requesting party. However, this offers the opportunity for a proxy to isolate either the client or the server from the network. When referring to a server-side proxy, isolation of the server is described, as shown in Figure 2 (Luotonen & Altis, 1994).

2.3.2. Application Layer Proxy

In addition to its position within the network, the Proxy can also have a position in the OSI model. The model describes how data will be transported in seven layers, each with different functions. Every layer can only communicate with its closest layer, starting with the physical layer that transmits a raw bitstream over a physical medium, up to the seventh layer, the application layer. This is the human interaction layer, through which applications can access network services. An Application Layer Proxy operates within this layer.

For the sake of simplicity, the term "proxy" will be used in the following text. Despite the abbreviated term, all properties of a server-side application layer proxy will be fulfilled.

Part II: Theoretical Framework

The theoretical framework is intended to serve as a foundation for the concepts and technologies that are the subject of the research. One of the central aspects is the Solid Ecosystem, which will be explained in chapter four. The ecosystem comprises several elements, and in this chapter, in particular, the Solid Protocol, Solid Provider, Solid Application Interoperability, and its limitations will be presented. The second chapter will refer to the concept of Data Sovereignty within the ecosystem. In this context, the concept of data trustees, the strategies for data capturing and the verification of ownership will be explained. Finally, the Quality Model will be demonstrated, which is necessary to perform an analysis of the system design and performance efficiency.

3. Solid Ecosystem

The Solid Project is an RDF-based ecosystem that aims to achieve a decentralized web for individuals, social entities, or software. The ecosystem comprises a number of specifications and associated technologies, which are primarily listed in the Solid Technical Reports[11]. The most fundamental specification is the Solid Protocol, also known as the core specification. In addition to the core specification, the following sections will explain the role of Solid Providers in enabling the protocol and the Solid Application Interoperability specification, which extends the protocol.

3.1. Solid Protocol

According to the Mozilla Developer Network (2023), a protocol is a set of rules for exchanging data between computers. Fischer & Hofer (2011, p. 706) provide additional clarification on this topic. The protocol includes setup, termination, collision prevention or correction, content integrity, and the actual actions within the same layer.

The Solid Protocol is a type of protocol that encapsulates a modified set of new and existing specifications to fit into the ecosystem. The Protocol Specification includes additions and adoptions, or references to the original ones (Sarven et al., 2022). The specifications included here are only representative of a subset of the definitions relevant to this research, primarily focusing on special http headers, resources, representation, identity, authentication and authorization.

3.1.1. HTTP Headers

The Solid Protocol employs a specific model of the relationships between resources on the Web and the types of these relationships. These are exposed as HTTP headers, linking to the related resource, as defined by Nottingham (2017).

HTTP Link headers are conventional HTTP headers, with the header field Link and a value separated by a colon.

Within the header value, the uri-reference points to the related resource, while the attribute value pair defines the relation.

This is demonstrated in Listing 1.

Link: <uri-reference>; attribute="value"In the event that multiple header values are to be applied, the values may be separated by a comma.

3.1.2. Resources

The Solid Protocol employs a variety of resource definitions, which are constructed upon one another in a hierarchical manner. These definitions either extend or restrict the previous definition, thereby forming a structured system.

The resources listed below are the most common resources in Solid and in the web in general:

| resource |

The target of an HTTP request, identified by a URI (Fielding & Reschke, 2014). |

| container resource |

A collection of resources and resource containers, organized in a hierarchical structure (Sarven et al., 2022). |

| root container |

The top-level resource within a resource container hierarchy (Sarven et al., 2022). |

Storages are spaces of URIs that affords agents controlled access to resources. The storage resource, however, is the root container for all of its contained resources and is owned by an agent (Sarven et al., 2022).

Storage resources are defined by a specific HTTP Link header that is used to mark a given container resource as a storage resource.

This is achieved by including the rel attribute with the value type, along with a URI reference that matches pim:Storage.

The owner of this storage can be made available optionally by including the rel attribute with the value solid:owner.

This is demonstrated in Listing 2, where additional headers and the response have been omitted in the listening (ibid.).

HEAD http://proxy.localhost:4000/client/

HTTP/1.1 200 OK

[...]

link: <http://www.w3.org/ns/pim/space#Storage>; rel="type", <http://www.w3.org/ns/ldp#Container>; rel="type", <http://www.w3.org/ns/ldp#BasicContainer>; rel="type", <http://www.w3.org/ns/ldp#Resource>; rel="type", <http://proxy.localhost:4000/client/.meta>; rel="describedby", <http://proxy.localhost:4000/client/.acl>; rel="acl", <http://proxy.localhost:4000/client/.well-known/solid>; rel="http://www.w3.org/ns/solid/terms#storageDescription", <http://proxy.localhost:4000/client/profile/card#me>; rel="http://www.w3.org/ns/solid/terms#owner"

[...]As the storage resource is a top-level resource in the container resource hierarchy, all containing resources belong to a storage resource. The containing storage resource is not directly accessible via an HTTP header or any other means. However, the hierarchy can be traversed until the highest level is reached in order to identify the containing storage resource (ibid.).

Description resources represent a distinct category of auxiliary resources.

These resources provide a description of a subject resource, which is referenced by a HTTP Link header.

The rel attribute is used with the value describedby to indicate this relationship.

This is also illustrated in Listing 2 (ibid.).

3.1.3. Representation

The representation of resources is primarily in the form of RDF documents. Bergwinkl et al. (2019) introduced the Dataset specification, which represents the contents of these documents as ECMAScript objects. The Inrupt JavaScript Client Libraries[12] refined this further and introduced the terms SolidDataset and Thing. In order to facilitate the interpretation of the RDF documents, the following definitions will be used, based on the definitions mentioned:

| Thing |

A Thing is a data entity that contains a set of data or properties about the Thing. |

| Dataset |

A dataset is defined as a set of Things, represented as a RDF resource. |

3.1.4. Identity

In a decentralized platform, it is essential to have identifiable. Solid accomplishes this with WebIDs and the WebID Profile, which is associated with it (Sambra et al., 2014).

| WebID |

A WebID is a special resource of RDF type foaf:PersonalProfileDocument, which denote an agent. When a fragment identifier is contained by the URI, the WebID Profile is denoted (ibid.). |

| WebID Profiles |

A WebID Profile is a Thing that serve to uniquely describe an agent, as denoted by the WebID (ibid.). |

3.1.5. Authentication

The authentication process in Solid is based on OAuth 2.0[13] and OpenID Connect Core 1.0[14], with certain enhancements. In order for the resource servers and authorization servers to function, they must have a trust relationship with the identity providers. Furthermore, ephemeral clients are intended to be the primary use case (Coburn et al., 2022).

The authentication is mainly expected to be authorized via the Authorization Code Flow[15]. However, as it is built on top of OIDC, the Client Credentials Flow[16] is a viable option in the majority of implementations, such as CSS[17].

In both cases, an access token will be returned to the authenticating client. Which usually is a Bearer Token in OIDC Sessions. Solid-OIDC however, depends on DPoP tokens. DPoP tokens ensure that third-party web applications can not use the token, as the are protected by a a public and private key pair (Coburn et al., 2022; Fett et al., 2023).

3.1.6. Authorization

Authorization is a fundamental aspect of Solid. Each WebID-owned resource must be authorized, with either or both WAC and ACP publishing mechanisms as specified. This even applies to resources that are publicly accessible and that permit unauthenticated requests. Both mechanisms utilize ACL to grant access to a resource, for a selected agent with defined access privileges. The possible privileges for a resource are read, append, and write access, as well as control access, which is used to manage the access privileges (Sarven et al., 2022; Capadisli, 2022).

3.2. Solid Provider

A Solid Provider is a web server that uses the Solid Protocol and provides the specified APIs and functions as a service. These services can be hosted on a private server or used from public hosting providers. The most common self-hosted implementations are Community Solid Server and Node Solid Server, among others[18]. Both are available on different public hosting providers, in addition to Inrupt PodSpaces[19], which is only accessible as cloud software.

3.3. Solid Application Interoperability

The Solid Specification outlines the overall framework of the system-wide data model.

Additionally, the Solid Application Interoperability Specification[20], an extension to the Solid ecosystem, addresses application-independent design and a uniform mechanism for data discovery.

It should be noted that the Specification has not yet been fully matured or implemented by any Solid Provider.

However, it can be used in part without a full implementation of the Solid extension.

The Editor’s Draft of November 7th, 2023 introduces a mechanism for discovering registered data without requiring knowledge of the physical structure of the file system or HTTP endpoints.

An application only needs to be aware of the profile document and follow the suggested references in the specification.

Figure 3 illustrates these entities and relations.

DataType and DataElement represent a selectable data type and element, respectively.

The entities and relations in Figure 3 represent a partially implemented data registry component of the Solid Application Interoperability specification by Bingham et al. (2023).

As described in the specification, an agent must declare an interop:Agent in the personal profile document to participate in the Solid Application Interoperability Specification.

From there, one can follow the specified path, starting with the registry set, which is referred from the declared agent.

The registry set contains an interop:DataRegistry, which refers to an interop:DataRegistraion.

As the interop:DataRegistraion resource is a resource container (ldp:Container), all contained resources will apply the interop:DataRegistraion attributes.

These attributes are defined in the registered ShapeTree, which is referred to from the interop:DataRegistraion.

The registered ShapeTree defines the shape of the contained resources, by referring to the Shape.

The Shape will point to a Shape Expression once more.

The Shape Expression defines the data types of the predicates utilized in the vocabulary.

3.4. Limits of Solid

One challenge in monitoring storage resources is that the ownership of a resource is not necessarily linked to the requested resource within the storage resource. As previously mentioned in the storage resource section, the path of a URI can be reduced, path segment by path segment, to identify the containing storage. However, the storage is owned by at least one owner due to the specification. This piece of information is not mandatory, which limits the reliability of identification to the storage resource (Sarven et al., 2022).

4. Data Sovereignty

4.1. Data Trustee

In the absence of an owner for the monitored resources, a concept is required to capture data and verify ownership at a later stage. One such concept is that of data trustees, which collect data on behalf of a natural or legal person. In the context of this work, the data trustee is an agent who captures data from the owner of the storage, who is another agent. The data trustee is an independent entity of trust that acts as a mediator between the data provider and the data user, ensuring the secure and legally compliant transfer of data. The concept can be distinguished by the type of data storage, which is either centralized or decentralized, and the type of data use, which is obligatory or optional (Specht-Riemenschneider & Kerber, 2022).

4.2. Data Storage Structure

In general, data storage structures can be divided into two categories when sharing a set of data with other agents that have been granted access to the data in question. The first category is container-based, which involves storing the shared data in a single storage resource and dividing it into container resources. The second category is storage-based, which involves storing the shared data in multiple storage resources, all of which are owned by the same agent.

4.2.1. Container-Based Storage

In a container-based arrangement, all data pertaining to multiple agents is stored in a single storage resource.

A mechanism must be in place or established to enable the agent with the requisite privileges to access the data.

With WAC, two main options exist for defining an Access Object.

In this context, the acl:accessTo option denotes the resource to which access is being granted, whereas the acl:default option denotes the container resource whose authorization can be applied to a resource lower in the collection hierarchy.

Consequently, the location and structure of the storage resource are publicly visible, thereby increasing the risk of information disclosure vulnerabilities.

The process of creating a new container resource however, is well part of the Solid Protocol and thereby the usage is unified.

The process of creating a new container resource, however, is a fundamental aspect of the Solid Protocol, thereby ensuring unified usage.

An illustrative example can be found in Listing 3 (Capadisli, 2022).

POST http://proxy.localhost:4000/client/

Content-Type: text/turtle

Link: <http://www.w3.org/ns/ldp#BasicContainer>; rel="type"

Slug: new-containerThe implementation of custom mechanisms to expose data to associated agents may eliminate information disclosure vulnerabilities. However, when bypassing the authorization mechanism provided by Solid, the risk of inadvertently exposing data to an agent that does not have the appropriate permissions increases.

4.2.2. Storage-Based Storage

A storage-based approach to data storage for agents is a convenient method, as it encapsulates the data for an agent in a data space of their own.

This approach ensures that no unnecessary information is shown to the public and that the data is stored in a secure context.

As this is the purpose of a storage container, there is no individual mechanism required to ensure that the data is accidentally exposed to an agent that does not have any privileges to that data.

The acl:accessTo and acl:default mechanisms, which have been applicable in a Container-Based Storage context, can also be applied here.

However, there is a significant disadvantage to this approach over the Container-Based Storage approach.

Namely, there is no unified API in the specification.

While the creation of container resources is defined in the Solid Protocol, the creation of storage resources differs for each implementation. CSS for instance, requests a process in multiple steps, with the final step shown in Listing 4[21].

5e647928-b7f0-4357-9927-f54d66a04790.POST http://proxy.localhost:4000/.account/account/5e647928-b7f0-4357-9927-f54d66a04790/pod/

Authorization: CSS-Account-Token a3395e7c-7d3f-40a1-9dff-7fa25b48c1a9

Content-Type: application/json

{

"name": "new-pod"

}In contrast, Inrupt’s ESS, as an alternative Solid Provider, only requires a simple authorized POST request as shown in Listing 5[22].

POST https://provision.inrupt.com/5. Quality Model

The quality model is a valuable instrument for assessing the quality of a software system. The model can be applied to a variety of aspects, depending on the specific quality criteria that are to be observed. In this section, the System Design Quality and the Performance Efficiency are the aspects that are to be characterized.

5.1. System Design Quality

The determination of the quality of systems and software is a complex process that is challenging to verify. The specific approach taken may depend on the problem to be solved. Martin (2018) distinguishes between the micro architecture view, which considers low-level details, and the macro architecture view, which addresses quality concerns at an abstract level. These concerns can be divided into two categories: Component Cohesion and Component Coupling These categories can be used to determine the quality of a system design, in therms of inner and outer connections.

5.1.1. Component Cohesion

The component cohesion represent general approaches to system design, as defined by Martin (2018). It is used to specifiy components that are ment to be gouped in one package or service. In order to accive that three principles are needed to be satisfied by the system, the Reuse/Release Equivalence Principle, the Common Closure Principle, and the Common Reuse Principle.

The principles outlined here are intended for object-oriented programming, but they are also generic concepts that can be applied to any top-level view of a system, as will be in this context. Therefore, each subsystem of the proposed system will be treated as a package in the object-oriented paradigm.

5.1.2. Component Coupling

The external relationships of a component, namely the connections between one component and another, are referred to as coupling. Four principles are relevant to this matter: the Acyclic Dependencies Principle, Top-Down Design, Stable Dependencies Principle, and Stable Abstractions Principle (Martin, 2018).

These principles are to be taken into consideration when software components are aligned to each other on a top-level view. In addition to the Component Cohesion Principles, they also represent a part of the spectrum of a macroarchitectural view.

5.2. Performance Efficiency

ISO/IEC 25010 is a quality model for the evaluation of system quality. The quality model defines which characteristics are to be considered when a system is developed. It considers various aspects of a software system. In this case, the characteristics of performance efficiency are the central aspect that is observed. It represents the extent to which a system performs its functions, considering the time and throughput necessary, its efficient use of resources, and the capacity used under specified conditions, as listed below:

| Time behavior |

A system’s responsiveness and throughput are measures of its ability to perform the functions it is designed to perform. |

| Resource utilization |

The quantity and type of resources utilized by a system to fulfill its operational requirements. |

| Capacity |

The maximum limits of storage usage correspond to the system parameters. |

The time behavior is of particular importance in this quality model. As the software is a web-based system that individuals are operating with, it is crucial to consider usability. Nielsen (1993) identifies three main time limits (in seconds), which are determined by human perceptual abilities, that should be kept in mind when evaluating the performance of a system.

| 0.1s |

The point at which the user perceives the system to respond instantaneously. |

| 1s |

The maximum limit may result in a delay in the user’s cognitive process, even if the user is aware of the delay. |

| 10s |

The temporal constraint on the user’s ability to maintain their focus on the process. |

In the event that the system is unable to provide a response time that is close to instantaneously, it is necessary to employ visual progression, for example, in the form of a percent-done indicator.

In terms of throughput—one of the key time-behavior aspects—IBM[23] has identified a range of influential factors that affect the throughput of a system in their solution. These include the specifications of the host computer, the software processing overhead, the data layout on the hard disk, the degree of hardware and software parallelism, and the types of transactions processed.

Resource utilization and capacity are also crucial considerations when evaluating performance efficiency. However, due to the limited scope of this research, they are not included in this analysis.

Part III: Design and Implementation

The Design and Implementation section provides a technical perspective on the prototype, which has been developed to validate the requirements and quality. The System Design illustrates the abstract concept of software, divided into its Logical Topology, Logical Data Model, and System Behavior. In contrast, the Technology section offers a more detailed examination of the dedicated software. Finally, a summary of the state of the art will be provided in the chapter on related work.

6. System Design

6.1. Logical Topology

The logical topology describes the access, transport, addressing of protocols, and data paths. The following section describes the system components and their internal and external connections.

6.1.1. Component Orchestration

The system components consist of three main parts: the client, the proxy, and the Solid Provider as the server. The client does not require any concept-specific logic and will be omitted from the system component view. Clients can access the public endpoint without any changes to the API. The Solid Provider should also remain unaffected and only be accessed through its HTTP APIs. When accessing the storages that exist in the Solid Provider, it is important to divide them by ownership. This approach results in two different orchestrations of the system components: one where the captured data is held in trust, and another where the data is owned by the client.

Client as Data Owner

In this approach, the main entry, such as a proxy module manager or router, delegates the network request of a monitored resource or endpoint to the proxy module in charge. The module verifies that the resource exists in storage. If so, the request module data is appended to a resource container within that storage. Figure 4 illustrates this topology.

Trustee as Data Owner

Similar to the previous approach, the main entry delegates the network request for a monitored resource or endpoint to the responsible proxy module. The module then verifies the resource’s existence in storage. If applicable, append the request module data to a resource container in the module’s storage. Figure 5 illustrates this topology, with the trustee as the data owner.

Depending on whether the capturing strategy is permanent or registration-based, both approaches may require agent identity verification. This requirement is indicated by a dashed arrow between the proxy module and the storage in both figures.

The trustee-as-data-owner approach eliminates the need for ownership verification for every requested resource and avoids potential issues with the module’s writing permissions to the client’s storage. This approach is preferred over the client-as-data-owner approach, where the module relies on enough permissions.

6.2. Logical Data Model

6.2.1. Entity-Relationship Model

The Entity-Relationship Model is based on the Solid Application Interoperability specification and describes the logical arrangement of the system’s data. This selected part of the specification can be used without further modification while the specification is still a draft. However, the current state of the specification does not fully satisfy the needs of a claiming mechanism. Figure 6 illustrates the additions to the model that are necessary to enable this mechanism. The full model can be found in Appendix A.

The model meets the requirements of the Solid Application Interoperability Specification, which is omitted in Figure 6.

However, as described in Solid Application Interoperability, the data discovery will begin from the personal profile document.

This document declares an interop:Agent, which refers to a registry set.

This declaration informs agents that the data model will be modeled according to the specification.

The registry set contains a list of all references to data registrations.

A data registration is a resource container that contains all resources in a given tree[24] and shape[25].

As the interoperability specification does not address the handling of multi-agent data or require agents to participate in the specification, some enhancements have been made to the data model. In Figure 6, the highlighted additions (bold) to the model include the requirement that Things contained by the registry set must have a unique identifier based on the claimed item, which in this case is the hashed (SHAKE256) storage URL. The Thing’s type is claim:Registry, a newly introduced Claim Vocabulary that will be explained in detail in the Custom Vocabulary section.

The claim:Verification is a resource within the observed storage that serves to verify using a verification code.

This code must correspond to the verification code within the claim:Registry to authorize the trustee’s access to the claimed data.

6.2.2. Custom Vocabulary

The vocabulary provided by the Solid Ecosystem does not cover all the necessary information that has been introduced in the data model. An custom RDF vocabulary for the claim process and logging of access has been introduced to support this.

Claim Vocabulary

Besides the ACL vocabulary, that allows the access granting of resources of a webserver, there are several other model for processing of restricted data. The ODRL Information Model[26] for instance, aims to standerize the permission, prohibition, and obligation of general content. The DPV[27] however enables expressing machine-readable metadata about the use and processing of personal data, with focus on the GDPR[28]. In order to prevent the creation of another information structure besides the model inherited from the Solid Application Interoperability and the limited options of integrating these model into the Solid Application Interoperability a custom vocabulary for the claiming mechanism has been introduced.

<#Registry>

a rdfs:Class ;

rdfs:label "A registry entry for data that has been the subject of a trustee claim"@en .

<#Verification>

a rdfs:Class ;

rdfs:label "A verification resource, located in monitored storage" .

<#trustee>

a rdf:Property ;

rdfs:label "The WebID reference of the agent requesting access to the claimed data"@en .

<#monitoredStorage>

a rdf:Property ;

rdfs:label "The observed storage reference"@en .

<#verificationResource>

a rdf:Property ;

rdfs:label "The reference to the verification resource in the monitored storage"@en .

<#verificationCode>

a rdf:Property ;

rdfs:label "A random hash in the registry and verification resource"@en .

<#claimedData>

a rdf:Property ;

rdfs:label "The reference to the resource container of all claimed data resources"@en .The claiming vocabulary presented in Listing 6, provides an illustrative example of how such a vocabulary might be constructed. However, in the implementation, this approach has not been employed in an effective manner. For the purposes of mocking, the URL references were sufficient, as the data was not fetched from the vocabulary. Nevertheless, the semantics presented in the listing are accurate. Uses of the RDF vocabulary are shown in Claim Registry (Data Model) and Verification (Data Model).

Access Log Vocabulary

The access log vocabulary is a dynamically generated vocabulary from the agent, produced in its own context.

For instance, the DPC agent generates it at http://proxy.localhost:4000/dpc/ns/log.

This enables each agent to bring its own vocabulary if necessary.

The vocabulary is a condensed and human-readable form of the HTTP Vocabulary[29].

In order to facilitate comprehension by non-expert users, the vocabulary was introduced in a simplified form, as illustrated in Listing 7.

<#AccessLog>

a rdfs:Class ;

rdfs:label "AccessLog"@en .

<#date>

a rdf:Property ;

rdfs:label "Accessed at"@en .

<#accessor>

a rdf:Property ;

rdfs:label "Accessing agent"@en .

<#application>

a rdf:Property ;

rdfs:label "Accessing application"@en .

<#resource>

a rdf:Property ;

rdfs:label "Accessed resource"@en .

<#action>

a rdf:Property ;

rdfs:label "Action"@en .The relationship between the HTTP Vocabulary and the data is that the majority of the data originates from a regular request object.

For instance, al:resource matches the http:absolutePath property.

As the vocabulary is custom, additional processing has been introduced. al:action, which matches the http:methodName, such as POST, GET, etc., has been converted to CRUD operations. al:accessor is a part of the serialized authorization header, equivalent to http:RequestHeader.

Finally, the al:application property is intended to display the application name that appears when a Solid application is requesting data access.

When granting access, the token is stored and associated with each authorized request.

However, this technique is only effective when using the Authorization Code Flow authorization method.

It is also noteworthy that al:accessor and al:application may be absent in certain instances.

To illustrate, if a resource is accessible to the general public, there is no authorized request and thus no requesting agent or logged-in Solid application.

The utilization of the RDF vocabulary is illustrated in Access Log (Data Model).

6.2.3. Information Retrieval

The DPC agent captures, manages, and presents client data. Data can only be retrieved through the DPC agent. Figure 6 shows five paths through the data structure. Two of the paths are alternative paths that lead to the same leaf of the graph. The resulting data that can be received is:

| AccessLogShape |

following path [1,1,1], [1,2,1,1] |

| AccessLog |

following path [1,1,2], [1,2,2] |

| Verification |

following path [1,2,2] |

The bracketed numbers indicate which branch to follow to access the described data.

6.2.4. Serialized Data Model

Before looking at the serialized model, it is important to understand the structure of the HTTP endpoints. The storage URLs for the HTTP APIs will begin with a storage identifier added as a suffix to the base URL. Figure 7 shows the storage URLs at the second level. The data of the corresponding agent will be represented below this node.

The two most commonly used serialization formats for RDF-based data in data-driven web-based systems are text/turtle and application/ld+json.

This inspection does not focus on data storage, as the Solid Provider is considered replaceable.

However, HTTP APIs use Turtle as the exchange format for communication, which will be displayed below.

As part of the structural hierarchy shown in Figure 7, all resources and listings refer to the data model shown in Figure 6.

Personal Profile Document (Data Model)

To participate in the Solid Application Interoperability Specification, an interop:Agent must be declared in the profile document.

This node will also refer to the registry set.

Listing 8 presents the corresponding RDF fragment.

<#id>

a interop:Agent ; (1)

interop:hasRegistrySet <http://proxy.localhost:4000/dpc/registries> . (2)| 1 | Declaration as interop:Agent. |

| 2 | Reference to the registry set. |

| Followed Path [1] |

Registry Set (Data Model)

The registry set contains an entry for each agent who has claimed data captured by the DPC agent. This captured data will be referred to as data registration. The subject of the RDF triple, however, must be unique and built based on the claimed subject. In this case, it will be the hashed storage URL. Listing 9 presents the corresponding RDF fragment.

<#ab674650> (1)

a interop:DataRegistry;

interop:hasDataRegistration <http://proxy.localhost:4000/dpc/data/74242fac/AccessLog/>.| 1 | The hashed (SHAKE256) storage URL. |

| Followed Path [1,1] |

Data Registration (Data Model)

The Shape Tree data is referenced in the data registration. As it is a container resource (see Container (Access Logs from Data Model)), all child resources will satisfy the referenced Shape Tree. Listing 10 presents the corresponding RDF fragment.

interop:DataRegistration Thing at http://proxy.localhost:4000/dpc/data/74242fac/AccessLog/<>

a interop:DataRegistration ;

interop:registeredBy <http://proxy.localhost:4000/dpc/profile/card#id> ;

interop:registeredAt "2024-04-02T16:00:09.959Z"^^xsd:dateTime ;

interop:registeredShapeTree <http://proxy.localhost:4000/dpc/ns/log.tree#AccessLogRegistrationTree> . (1)| 1 | The referenced Shape Tree. |

| Followed Path [1,1,1], [1,2,1,1] |

Shape Trees (Data Model)

Both Shape Trees, AccessLogRegistrationTree, and AccessLogTree define the contents of the referring container resource.

The AccessLogRegistrationTree defines the resources that contain Shape Tree Resources in a given shape.

The referenced Shape Expression declares the form of the shape.

Listing 11 presents the corresponding ShapeTree fragment.

PREFIX st: <http://www.w3.org/ns/shapetrees#> .

PREFIX log-shex: <http://proxy.localhost:4000/dpc/ns/log.shex#>.

<#AccessLogRegistrationTree>

a st:ShapeTree ;

st:expectsType st:Container ;

st:contains <#AccessLogTree> . (1)

<#AccessLogTree>

a st:ShapeTree ;

st:expectsType st:Resource ;

st:shape log-shex:AccessLogShape . (2)| 1 | The internal reference to AccessLogTree |

| 2 | The reference to the Shape Expression (Data Model) |

| Followed Path [1,1,1], [1,2,1,1] |

Shape Expression (Data Model)

ShEx defines the schema for every literal associated with a predicate of the vocabulary. The RDF vocabulary will not be listed further. Listing 12 presents the corresponding ShEx fragment.

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX xml: <http://www.w3.org/XML/1998/namespace>

PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>

PREFIX shx: <http://www.w3.org/ns/shex#>

PREFIX log: <http://proxy.localhost:4000/dpc/ns/log#>

<#AccessLogShape> {

log:date xsd:dateTime ;

log:accessor IRI ;

log:application xsd:string ;

log:application xsd:string ;

log:resource xsd:string ;

log:action xsd:string

}| Followed Path [1,1,1], [1,2,1,1] |

Container (Access Logs from Data Model)

As explained in the Data Registration (Data Model) section, this container resource corresponds to the interop:DataRegistration definition.

The files contained within it meet the specified definitions.

For example, the file dated 2024-04-02 will be referred to as Access Log (Data Model), matching the Shape Expression (Data Model).

Listing 13 presents the corresponding RDF fragment.

<>

a ldp:Container, ldp:BasicContainer, ldp:Resource ;

ldp:contains <2024-04-02> .| Followed Path [1,1,2], [1,2,2] |

Access Log (Data Model)

The access log is a resource that contains the actual data and satisfies the shape as defined in the Shape Expression (Data Model). Listing 14 presents the corresponding RDF fragment.

ldp:Container Thing at http://proxy.localhost:4000/dpc/data/74242fac/AccessLog/2024-04-02@prefix al: <http://proxy.localhost:4000/dpc/ns/log#>.

@prefix xsd: <http://www.w3.org/2001/XMLSchema#>.

<#1712073817394>

a al:AccessLog ;

al:date "2024-04-02T16:03:37.426Z"^^xsd:dateTime ;

al:accessor "http://proxy.localhost:4000/dpc/profile/card#me" ;

al:application "Data Privacy Cockpit" ;

al:action "READ" ;

al:resource "/client/dpc" .| Followed Path [1,1,2], [1,2,2] |

Claim Registry (Data Model)

The claim registry is an custom extension of the interorp:DataRegistry within the registry set.

It refers to the root container for claimed data and the verification resource.

Listing 15 presents the corresponding RDF fragment.

<#ab674650>

a claim:Registry;

claim:trustee <http://proxy.localhost:4000/client/profile/card#me>;

claim:monitoredStorage <http://proxy.localhost:4000/client/>;

claim:verificationResource <http://proxy.localhost:4000/client/dpc#verification>;

claim:verificationCode "66097db6e9c3c234eb35f8ca66b5e4d829c6d...";

claim:claimedData <http://proxy.localhost:4000/dpc/data/74242fac/>.| Followed Path [1,2] |

Container (Claimed Data from Data Model)

This resource contains all claimed data. When using the Solid Application Interoperability Specification, it primarily refers to data registrations and their corresponding containers. Listing 16 presents the corresponding RDF fragment.

<>

a ldp:Container, ldp:BasicContainer, ldp:Resource ;

ldp:contains <2024-04-02> .| Followed Path [1,2,1] |

Verification (Data Model)

The verification resource shown in Figure 7 is the only resource stored by the client and will be used for comparison purposes. The verification code will be compared to the verification code of the claim registry. If they are equivalent, access to the claimed data will be granted. Listing 17 presents the corresponding RDF fragment.

<#verification>

a <urn:claim#Verification> ;

<urn:claim#verificationCode> "66097db6e9c3c234eb35f8ca66b5e4d829c6d..." .| Followed Path [1,2,2] |

Each of the mentioned resources must have a corresponding ACL. The lists have been intentionally omitted for simplicity. The DPC agent requires read and write access to all of these resources. The only exception is the verification resource, which only needs to be read.

6.3. System Behavior

6.3.1. Process Entries

There are three main behaviors that reflect interactions that can be executed directly or indirectly by the client: CRUD requests to a given resource, claiming log data, and discovering this data. The reference section defines subsequences that may be used in each of these interactions.

Authorised CRUD Requests

The process of authorizing a request can be divided into two steps. Firstly, an authorization token will be requested using an Authorization Client Credentials Flow or an alternative authorization process such as an Authorization Code Flow. Secondly, the CRUD request will be sent with an authorization header and the response will be provided accordingly. The key difference is that the request and response will be forwarded by the proxy instance. Figure 8 provides an illustration of this process.

Log Claiming

Network logs are captured by storage, not by WebID, and it is necessary to associate the data with a WebID at some point to make it readable to the owner. This is done by a claiming mechanism. This requires a Solid application that has access to both the user storage and the DPC storage. Both connections are handled by the DPC API server, and when the connections are established, the API initializes an verification code on behalf of the client agent to be verified by the DPC API server when it discovers the logs. Figure 10 provides an illustration of this process.

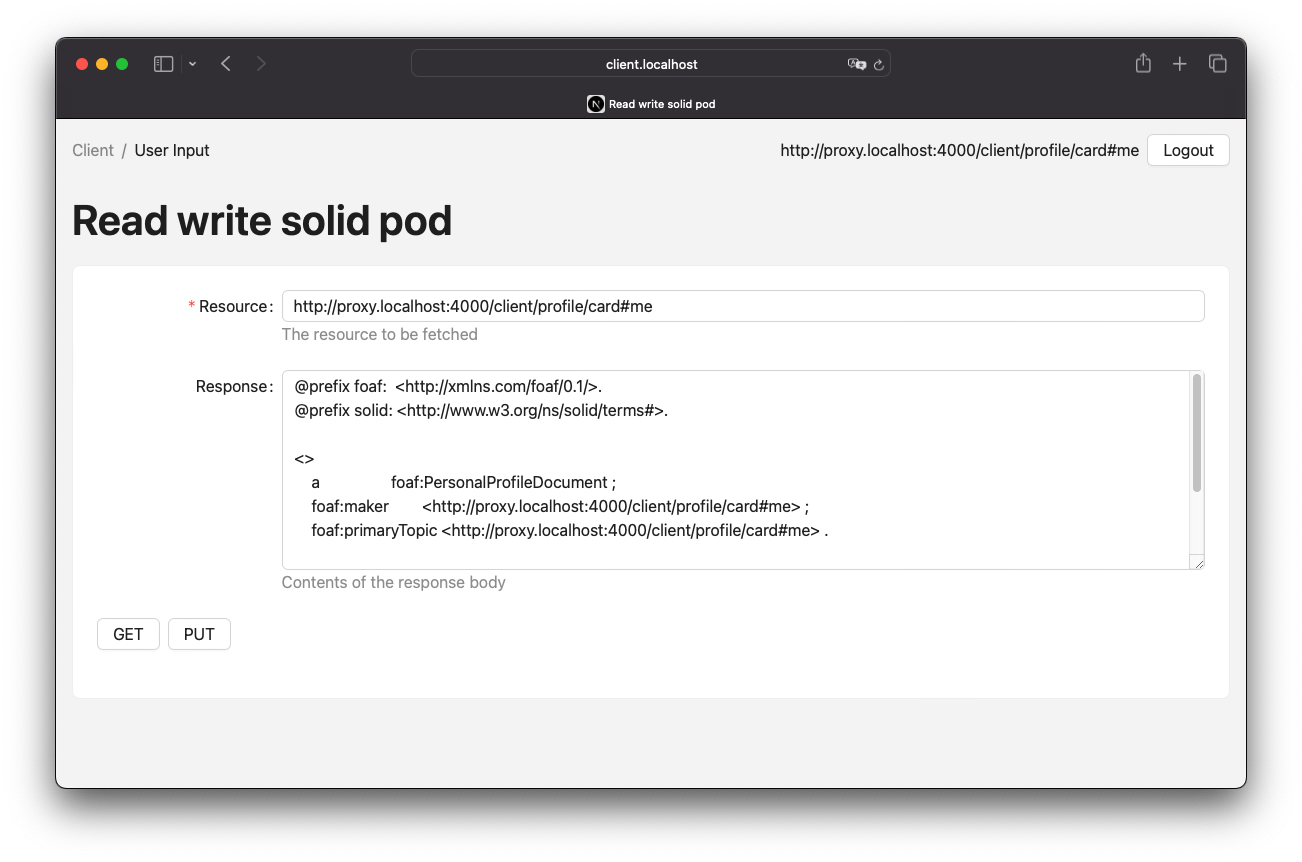

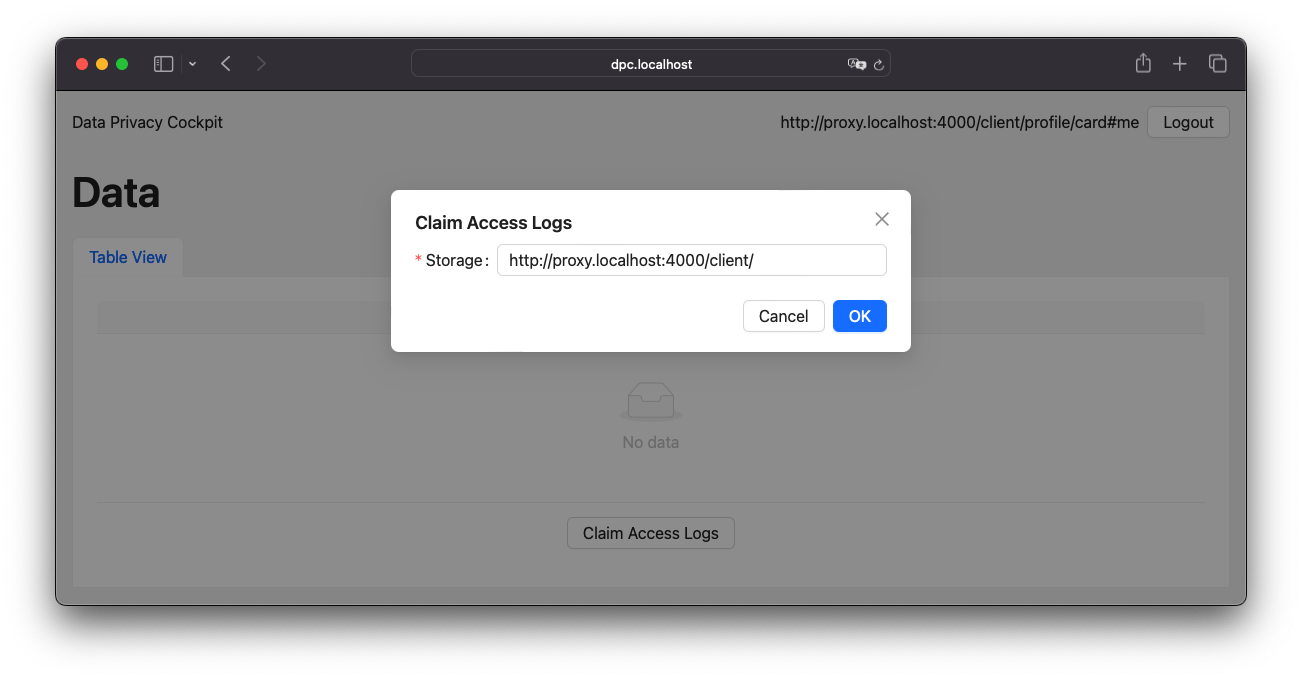

The process of claiming access logs is relatively straightforward, requiring only a single form input in the UI. When an agent is logged in, the related storage can be detected automatically. If not, the input field allows custom URL input. Upon submission, the rest of the process occurs in the background. Figure 11 presents a screenshot of this UI.

Log Discovery

The logs in the DPC API server are represented as routes. These routes will either return an empty turtle file or attempt to resolve the claim and receive the actual files from the claimed storages. Figure 12 provides an illustration of this process.

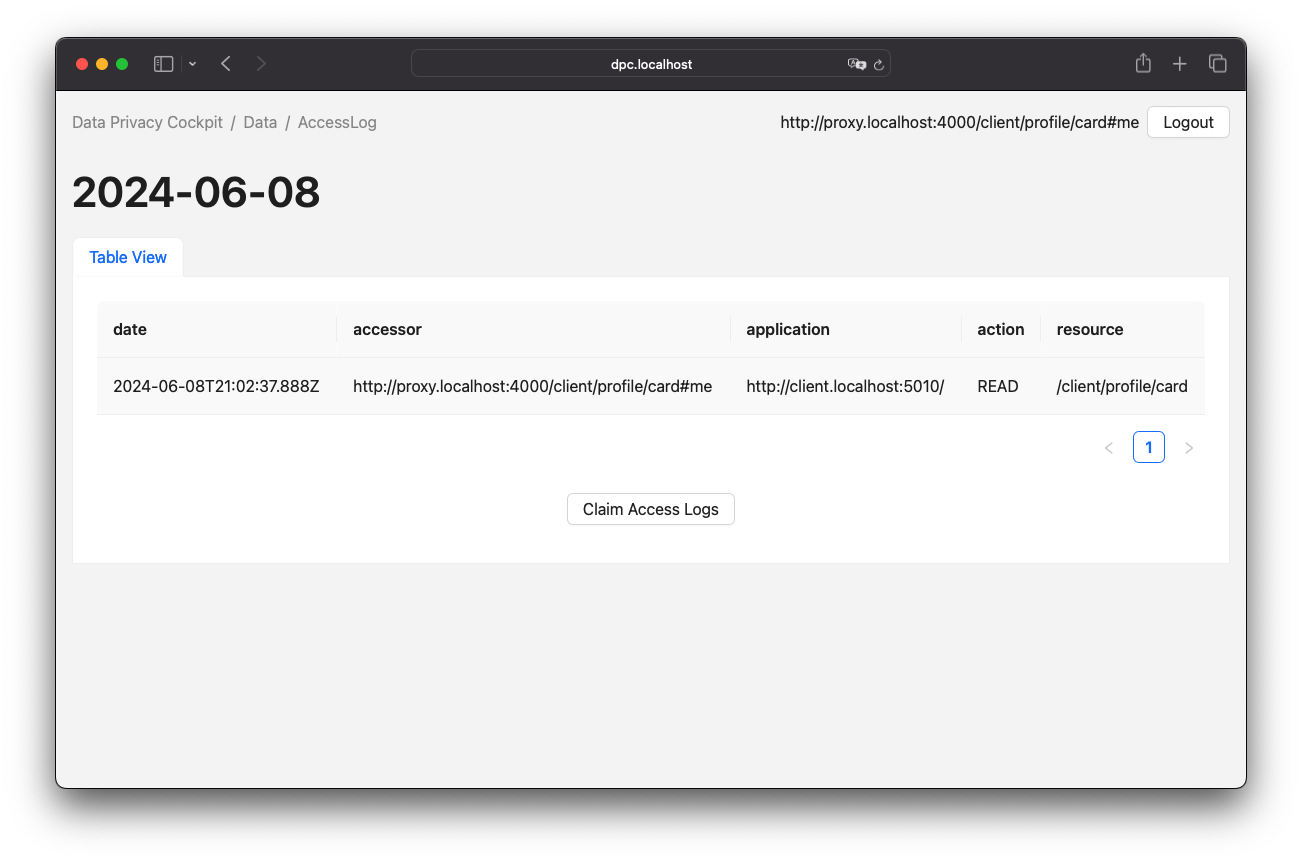

Upon successful claiming of an access log container, the agent is presented with a view of the logged entries. This view is represented by a table, as illustrated in Figure 13.

6.3.2. Process References

Authorization Client Credentials Flow

The authorization client credentials flow, is a authorization technique defined in RFC 6749, Section 4.4[30].

To obtain the authorization token, send a POST request to the authorization server with the client ID and secret in the authentication header.

It is also necessary to set the grant type to client_credentials and the scope to webid.

The proxy will forward requests as every CRUD request because the authorization server is not directly accessible.

Figure 14 provides an illustration of this process.

Authorization Code Flow

Another authorization technique is the authorization code flow, as defined in RFC 6749, Section 4.1[31]. It is important to note that this technique differs from a Authorization Client Credentials Flow, especially in the way that redirects are part of this flow. This means that user inputs are required in this technique and they cannot run automated.

Forwarded Request

Request forwarding is quite simple, the proxy receives a CRUD request that is passed through the server. The returning server response will take the path back to the original requester. Since the requester can be the proxy itself, there needs to be some kind of guard to prevent infinite recursive calls. If the requester is someone other than the proxy, the Data Privacy Cockpit middleware can be executed. In certain cases, it may be necessary to read and evaluate the server response, which can be done during a response interception[32] step. In this process, a pair of client ID and the name of the registered web application, which were submitted during the OIDC process, is stored. This information can be utilized in authorized requests by processing the authorization token and retrieving the client ID from the store to obtain the corresponding application name. Figure 15 provides an illustration of this process.

DPC Middleware

The Data Privacy Cockpit is a Solid application that requires a dedicated agent and client credentials. The agent must log in before any other actions can be executed. If successful, the container resources of the requested resource will be searched until the corresponding storage is found or no more container resources are left to search. If a storage is found, access logs will be created or updated. Figure 16 provides an illustration of this process.

Lookup Claim Data in Registry

The process of retrieving claimed data follows the data discovery outlined in the Solid Application Interoperability specification. If the data does not already exist, it will be created. Finally, the registry data will be filtered from the set of data and returned. Figure 17 provides an illustration of this process.

Create Dynamic Namespace

A process will be initiated to create the Access Log Vocabulary and related ShapeTree and ShEx resources on the server in a dynamic manner during runtime. This process will occur within the individual storage resource of the module agent. Furthermore, the ACL resources will be added with access privileges set to public accessibility.

Create or Update Dataset

The update of a dataset begins with a test to determine if the resource already exists on the server. If it does, it will be received as a dataset. Otherwise, a new dataset will be created. The dataset will be enriched with new data and stored on the server. Figure 18 provides an illustration of this process.

Initialize a Verification Code

To initialize a verification code, start by generating a random key. The DPC API will store the verification code, storage, WebID, and additional data in a location accessible to the DPC agent for later verification. If the DPC API cannot access the client’s storage, the process will terminate without adding data to the DPC storage. Figure 19 provides an illustration of this process.

Write Verification Code to Client Storage

Writing the verification code consists of two steps. The first step is to write the verification code to the client’s storage. Since the code must be read by the DPC agent, the second step is to grant read permissions for the agent. Figure 20 provides an illustration of this process.

Write Verification Code to DPC Storage

Before writing the verification code to the DPC storage, the agent must first verify their identity. After authorization, a new claim containing the verification code and associated storage will be added to the list of claims, along with the storage-related claims in the registry. Figure 21 provides an illustration of this process.

Get Claimed Resource

The process of obtaining a claimed resource will be managed by the DPC agent. The WebID from the active client session will be used to retrieve the claims from the registry, along with the storage and verification code for that claim. The DPC agent will then retrieve the verification code from the storage. If both verification codes match, the request will be forwarded by the DPC agent. Figure 22 provides an illustration of this process.

7. Technology

7.1. Technology Stack

The technological stack is entirely based on ECMAScript respectively TypeScript. Server-side scripts will be executed in the Node.js runtime environment, while client-side scripts will utilise the corresponding engine of the browser.

7.1.1. Project Structure

The project was created as a multi-package repository, with pnpm [33] serving as the package manager for Node.js-based projects.

A multi-package repository is a repository that is used to group a variety of packages and artifacts that are maintained in a single repository.

The initial two levels of the project directory, including the packages contained within this project repository, are illustrated in Figure 23.

Metadata directories and files are excluded from this diagram.

Node child packages are stored in the ./apps directory, the ./packages directory, and the ./tests/benchmark directory.

These directories contain the source code for the experimental prototype.

The ./docs directory contains all documents related to the research.

The ./scripts directory contains custom build scripts that are used to produce the project’s artifacts.

The ./tests/http directory contains simple HTTP test files, which are used for singular tests.

The Node.js packages contained in the ./packages folder are generic source code fragments utilized in the ./apps packages.

The ./packages/core folder contains the most basic data, which is used in nearly every package.

In contrast, the ./packages/ui folder contains data relevant to the user interface or general view logic.

These packages are required by the ./apps/client and ./apps/dpc packages, as they represent applications that an actual user can interact with.

The ./apps/client package can be used to test client requests, while the ./apps/dpc package is used to view the monitoring data captured by the DPC middleware.

The middleware, however, is part of the ./apps/proxy package, which delegates the ./apps/server package, a wrapper package for the CSS, to the Node.js process.

The ./tests/benchmark package is a wrapper package for Apache JMeter, which is called from the Node.js process.

7.1.2. Third-Party Software

A variety of packages are utilised in the experimental prototype, with two packages of particular significance for this study: the Community Solid Server[34] and Inrupt JavaScript Client Libraries[35].

The Community Solider Server is a modular implementation of the Solid Protocol, which allows for a variety of configuration options due to its modularity. With the exception of the configurations that must be changed for the Experiments, the default configurations have been applied, as generated by the Community Solider Server configuration generator[36]. The configuration options that have a particular influence on the tested scenario are data management and account management options. The data storage component of data management is configured as the file system by default, which may have an impact on performance due to the necessity of writing files to the hard drive. Similarly, locks are stored in the file system and are used to prevent simultaneous write operations on the same resource. The default authorization mechanism is WAC. With regard to account management, it is important to note that suffixes are used as storage container URLs, rather than subdomains. Furthermore, account management includes the identity provider, which uses OIDC. The complete configuration file can be accessed in Appendix B.

At the client side, the Inrupt JavaScript Client Library is used to access RDF resources in a uniformed way. Consequently, it is almost impossible to use CSS vendor APIs.

The network protocol HTTP is employed for all operations in this research. Security measures such as HTTPS are not considered in this analysis. Similarly, the Solid Provider has not been tested in an isolated network with a proxy, which must be the only public-accessible instance for a secure run in production environments. This potential issue has been tested with Docker[37], but it did not affect the research scenario.

7.2. Limits of the Technology Stack

The technology stack has some impact on what is possible within the Solid Protocol. The limitations mentioned here refer to the essential parts of the stack as listed in the Technology Stack.

As mentioned earlier, CSS has a locking mechanism. Locks are used to prevent concurrent writes to the same resource. According to the configuration generator documentation, disabling this mechanism can cause data corruption. Changing this mechanism to in-memory locking is not sufficient when multiple worker threads are used. Although multiple worker threads are not tested in this analysis, the file system configuration is used to ensure that variations of the tested scenario are allowed in later work. Consequently, concurrent writing to a resource is prohibited by the CSS.

The Inrupt JavaScript Client Libraries offer only limited support for appending RDF triples to an existing resource.

The guide on modifying an existing dataset[38] illustrates that it is advisable to load a resource, modify it, and then save it.

This approach results in the execution of a READ and (RE)CREATE requests, instead of a single UPDATE request.

The attempt to append data will result in an HTTP 412 Precondition Failed error response, as explained in Inrupt’s save considerations.

These considerations are particularly relevant in the context of System Design, where they influence the system’s behavior, such as the creation or update of a dataset.

7.3. Deviation from Specification

The divergence from the specifications, whether in the form of Solid Protocol or Solid Application Interoperability, is of vital importance in the analysis of the proposed DPC approach. Any variation or unspecified use of an API may result in inconsistent or corrupt behaviour with other Solid Providers, which is detrimental to the generic approach that the proposed apprach is intended to be. During the Design and Implementation of this approach, two main discrepancies in the specifications were identified.

The initial issue was identified as being related to the actual implementation of the utilized technologies.

It would be reasonable to consider this in the specification, as it is a generalized concern.

Both the proxy and server components engage in periodic self-requests for the purpose of writing access logs and verifying the identity.

Given the absence of a mechanism to prevent or limit recursive network calls, it has been necessary to implement a variety of custom mechanisms to exclude these requests from monitoring.

In the case of the Forwarded Request, this is presented as an isLoggableRequest condition.

The application logic behind this condition is comprised of three distinct tests.

One such test is that of a request header, which may or may not include a hash value.

This value is applied to requests originating from the same web instance.

Should the hash value in question align with the expected value, the request in question will not be monitored.

Another filter for self-requests is the test to determine if the request URL matches the OIDC configuration path.

This path is typically /.well-known/openid-configuration.

In the case of CSS, this could be enhanced to include all internal data, as all internal URL paths begin with /., but this technique is limited to CSS and is not a standard pattern.

Finally, all agent WebIDs, which are utilized in middleware, are to be disregarded.

As the connection is established within the middleware, the agents to be filtered are known at the time they are to be excluded.

The filters for the OIDC configuration path, as well as the WebID of the middleware agent, are obtained from the server instance.

In the absence of control over the fetcher, as is the case here, the request header hash mechanism cannot be employed.

Another issue that was identified was the necessity of writing data to container resources.

With a fundamental understanding of the CRUD operations, it would be relatively straightforward to perform a POST/PUT or PATCH request to the resource in question, for example: PUT http://proxy.localhost:4000/client/data/.

However, this is not the case with CSS, as writing to container resources involves writing metadata.

Metadata refers to the Description Resources mechanism, where a resource is linked as illustrated in Listing 2.

With the used version of CSS, only PATCH methods are permitted, resulting in a request such as PATCH http://proxy.localhost:4000/client/data/.meta for the creation of data and GET http://proxy.localhost:4000/client/data/ if the data is read.

This behavior is exclusive to CSS, yet a fundamental API to employ when Solid Application Interoperability is implemented, as the ShapeTrees are read from the resources containers, thereby inheriting the shape to the child resources.

8. Related Work

Since 2018, the Solid Community Group[39] has been responsible for the management of the Solid Project. Due to the relatively small size of the group and its limited public visibility, the level of activity within the community is correspondingly low. Consequently, research sources are limited and related work is almost non-existent. However, some work and projects do consider related ideas.